Transformers

Transformers in NLP are a type of deep learning model that uses self-attention mechanisms to analyze and process natural language data. The original Transformer uses an encoder-decoder design, and transformers can be used for many applications, including machine translation.

Let's begin by discussing datasets. Datasets are collections of data used by AI systems for analysis or, more accurately, for training. This data can come in various forms, such as CSV files, web pages, PDFs, images, videos, or even audio recordings. Datasets can grow quite large because, unlike humans, computers need many examples to identify patterns or make comparisons.

For example, if you wanted to use AI to identify the type of animal in a photo or to create a resume template, the model would need many example images or documents to learn a common pattern before it could generate an output, or response. AI takes our data, converts it into lists of numbers (called vectors), and then looks for common patterns in those numbers. Once the model has learned those patterns, it can use them to create a response.

Now, let's talk about a concept in AI called the attention mechanism. In AI, we take in lots of data from our datasets in order to analyze it, but do we need to study every piece of data equally? When athletes train for an event, they may focus on one aspect of their training more than another. For example, maybe they focus more on their diet than on their running routine or the type of shoes they use. Similarly, instead of processing the entire input equally, the attention mechanism helps a model "pay more attention" to the parts of the input that are most important for the task at hand.

Take for example translating from English to Portuguese, an AI model might focus more on specific words in the sentence that are most crucial for understanding the

meaning. Instead of processing the entire sentence equally, it dynamically decides which words to focus on, based on their relevance to the translation. Attention Mechanism

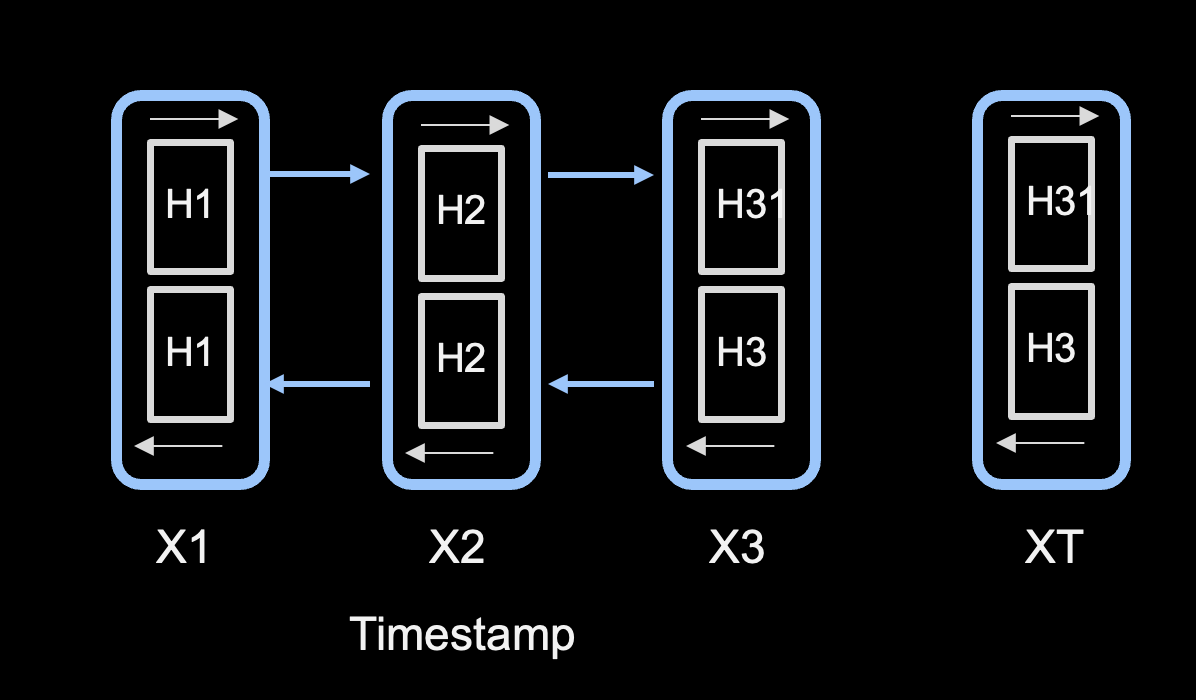

processes words in an order through an encoder and decoder process. Look at the diagram below:

This illustration shows how a model processes the words in a sentence from left to right, one time step at a time (X1, X2, X3). Each word is processed in order, not in parallel, because each step depends on the result of the previous one. This becomes a problem with huge datasets: the work for a sentence cannot be spread across many processors at once, so training does not scale well, and longer sentences mean longer processing times. The architecture in the diagram is a Long Short-Term Memory network, or LSTM, a type of recurrent neural network (RNN) used in both the encoder and the decoder to process sequential data.

To overcome this scalability problem, Transformers use a mechanism called self-attention. With self-attention, all the words in a sentence are processed in parallel, which makes training highly scalable for large datasets, such as the ones used to train Large Language Models.
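
To see why sequential processing is hard to scale, here is a minimal sketch of a plain recurrent network in Python. It is a simplified RNN, not a full LSTM (an LSTM adds gates that decide what to keep and what to forget), and the weights `w_in` and `w_rec` are hypothetical values chosen for illustration. The key point is the loop: each hidden state needs the previous one, so time step X3 cannot be computed until X1 and X2 are done.

```python
import math

def rnn_forward(inputs, w_in=0.5, w_rec=0.5):
    """Toy scalar RNN: h_t = tanh(w_in * x_t + w_rec * h_(t-1)).

    w_in and w_rec are hypothetical illustration values, not learned weights.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:  # time steps X1, X2, X3, ... must run one after another
        h = math.tanh(w_in * x + w_rec * h)  # depends on the previous h
        states.append(h)
    return states

# Three time steps; each state depends on every earlier one.
states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

Self-attention removes this chain of dependencies: the output for each position can be computed without waiting on the previous position.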

You may have heard of transformer types such as BERT and GPT. These pretrained transformers allow companies to use models trained on huge amounts of data without having to build their own. They rely on transfer learning: taking a model that has been trained on a large (often general-purpose) dataset and fine-tuning it for a specific task. There are also multimodal models, such as DALL-E, that work with both text and images.

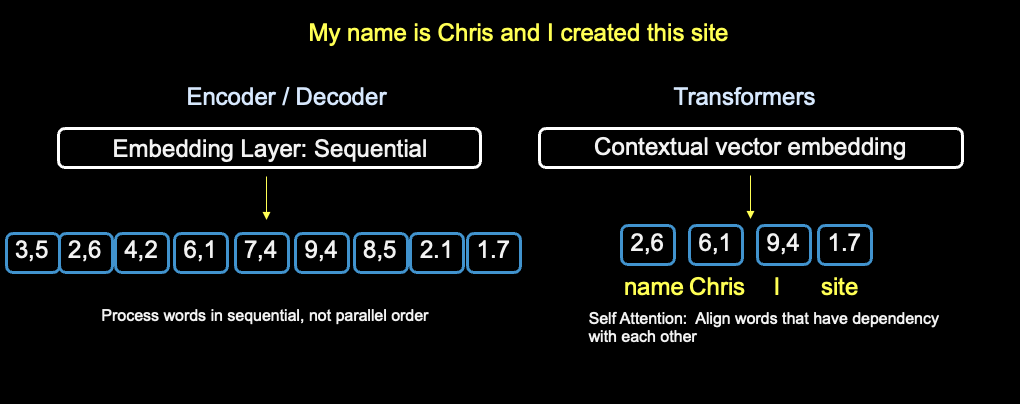

Let's look at an example to see how Transformers work. The sentence in the diagram above shows the conversion into vectors using both an encoder/decoder and a transformer. The encoder/decoder uses a sequential model: each word in the sentence is passed in and converted to a vector of numbers. Each vector under the embedding layer represents one word in the sentence; the sentence has 9 words, so there are 9 vectors.
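
Here is a minimal sketch of what an embedding layer does, assuming a hypothetical 9-word sentence (not the one in the diagram) and a randomly initialized embedding table with 4 numbers per word. In a real model the table is learned during training, and each vector usually has hundreds or thousands of numbers.

```python
import random

# Hypothetical 9-word sentence (not the one in the diagram).
sentence = "the quick brown fox jumps over the lazy dog".split()

# Build a vocabulary: each distinct word gets an integer ID.
vocab = {}
for word in sentence:
    vocab.setdefault(word, len(vocab))

# Hypothetical embedding table: one random 4-number vector per vocabulary entry.
# In a real model these numbers are learned during training.
random.seed(0)
EMBED_DIM = 4
embedding_table = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]

# Embedding layer: look up one vector per word in the sentence.
token_ids = [vocab[word] for word in sentence]
vectors = [embedding_table[i] for i in token_ids]

print(len(vectors))  # 9 words -> 9 vectors
```

Notice that both occurrences of "the" get exactly the same vector. A plain embedding lookup ignores context, which is the gap contextual embedding fills.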

Notice the difference between the sequential encoder/decoder and Transformers. The right side of the diagram uses contextual vector embedding. With transformers, each word's vector is built using the words it has a dependency on. "Chris" has a dependency on "name", and "I" and "site" could have a dependency on "Chris". Contextual embedding maps the context of words as they relate to, or depend on, each other.

This technique is known as self-attention. It is a form of soft attention, because each word gives every other word a weight between 0 and 1 rather than making a hard yes-or-no selection, and it is one reason why transformers are efficient for Large Language Models and other use cases that involve large amounts of data. By applying self-attention, the transformer model can capture the dependencies between different words in the input sequence and learn to focus on the most relevant words for each position.
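
The idea of soft weights can be sketched in a few lines. This is a simplified version of self-attention under one big assumption: the word vectors are used directly as queries, keys, and values, whereas a real transformer first multiplies them by learned weight matrices. The three 2-number word vectors are hypothetical. Each word scores every word (including itself) with a dot product, softmax turns the scores into weights between 0 and 1, and the word's new contextual vector is the weighted average of all the vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights between 0 and 1 that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def simple_self_attention(vectors):
    """Simplified self-attention: every vector attends to every vector.

    Assumption: the input vectors are used directly as queries, keys and
    values; a real transformer first applies learned projection matrices.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:  # positions don't depend on each other, so this could run in parallel
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)  # soft weights, not a hard yes/no selection
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-number vectors for three words.
words = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextual, weights = simple_self_attention(words)
for row in weights:
    print([round(w, 2) for w in row])
```

The first two vectors point in similar directions, so they give each other more weight than they give the third.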

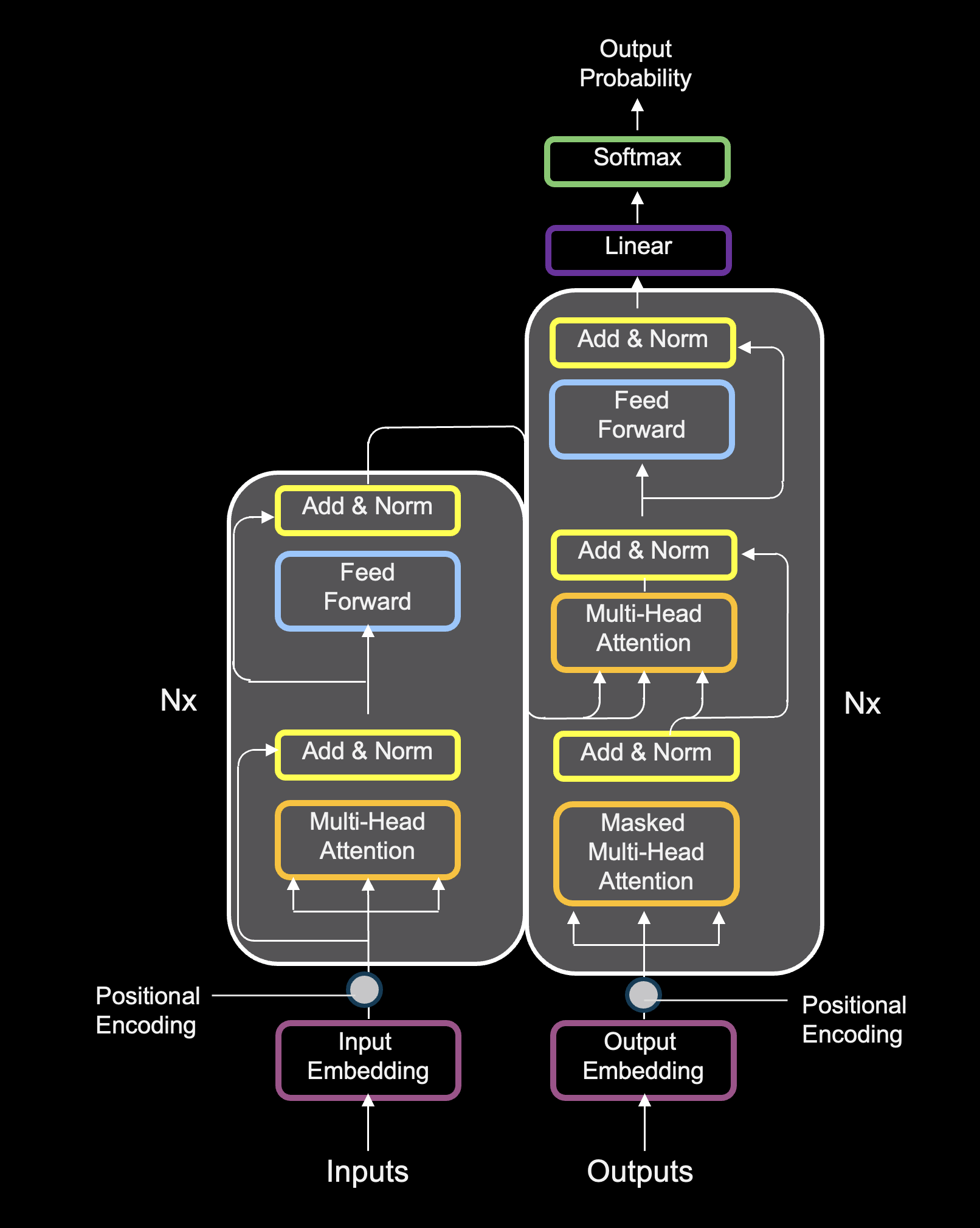

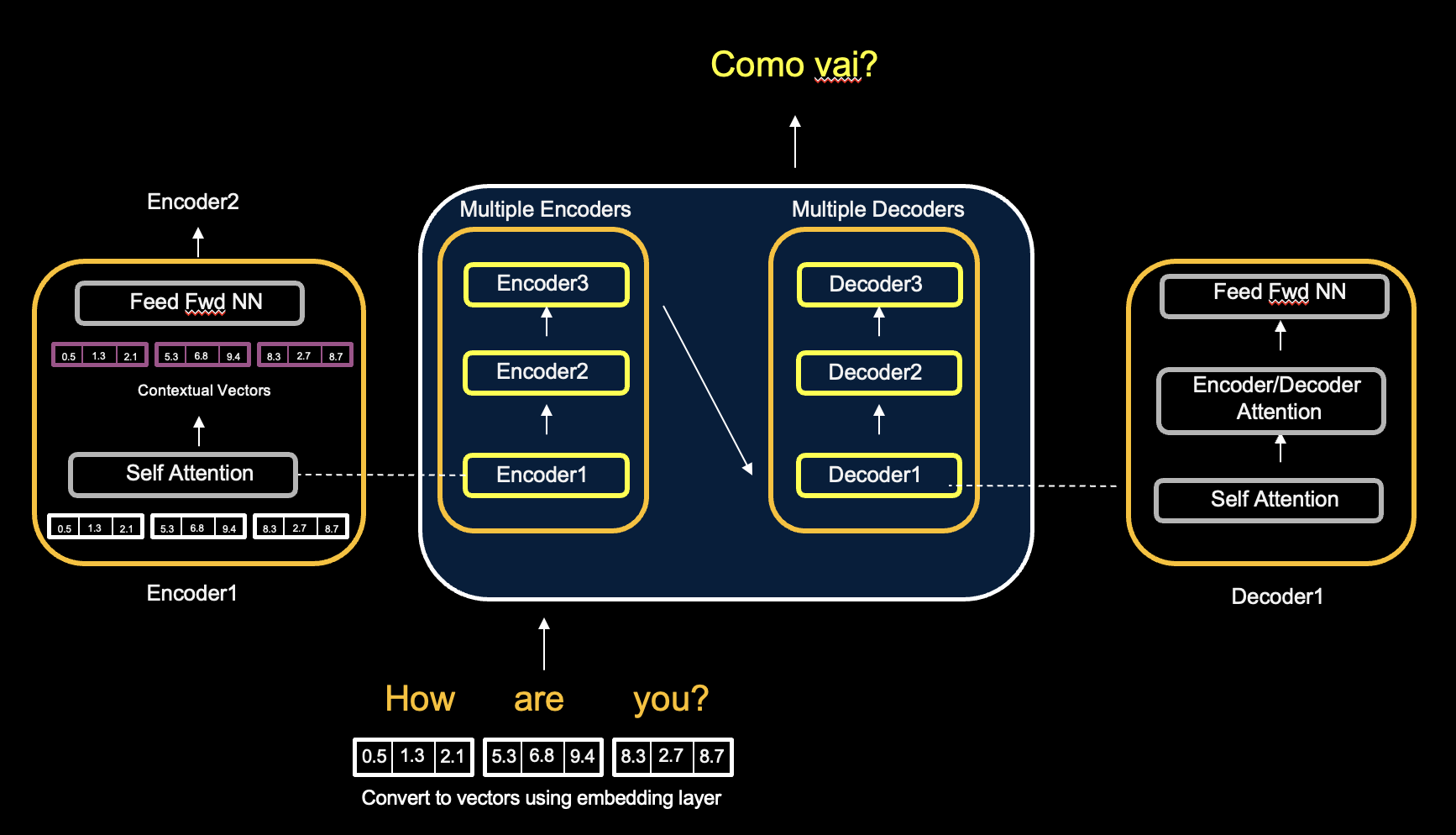

Let's now take a closer look at the transformer architecture. I'll use the example of translating English to Portuguese. In the diagram, I show multiple encoders and decoders stacked on top of each other. Stacking them enhances the transformer's ability to capture complex patterns in data.

Transformers use encoders and

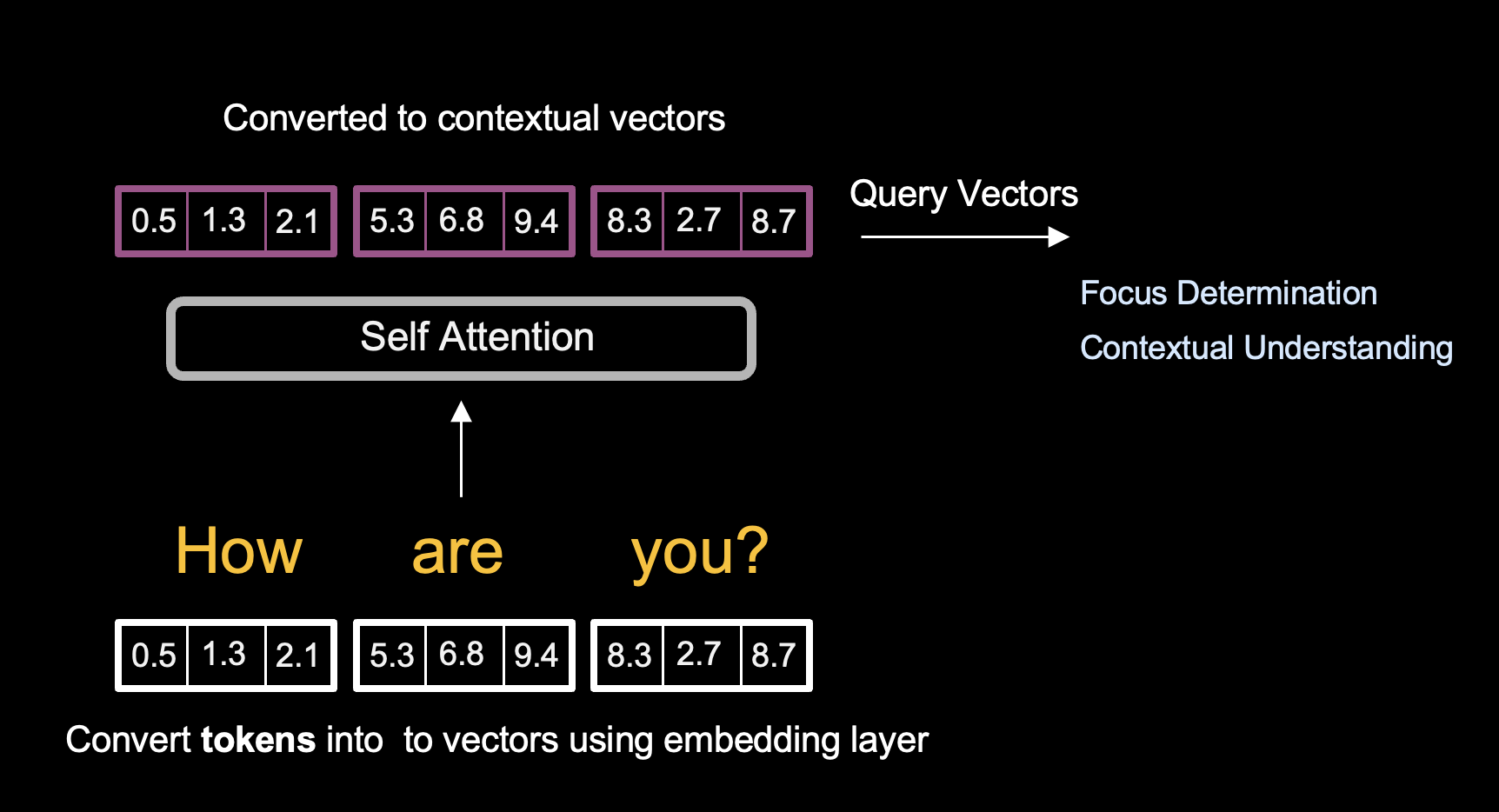

decoders, but its not in a sequential pattern. As i described earlier, self attention is used to take in the fixed vector input of "How are you?" in parallel, and converts it

to a different set of vectors using contextual embedding.

Recall that contextual vector embedding captures which words in a sentence have a dependency on each other.

Once self-attention has converted the input vectors into contextual vectors, the numbers are passed to the feed-forward neural network and then on to the next encoder. In the diagram I show 3 encoders and decoders, but transformer architectures can have 6 or more! The original Transformer used 6 of each.
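
The encoder stack described above can be sketched as a loop: self-attention, then a feed-forward network, repeated once per encoder. This is a simplified sketch, not a faithful Transformer: it reuses the inputs as queries, keys, and values, uses small hypothetical weight matrices `W1` and `W2`, and leaves out the residual connections and layer normalization the original architecture wraps around each step.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: inputs reused as queries, keys and values."""
    d = len(vectors[0])
    out = []
    for q in vectors:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors])
        out.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return out

# Hypothetical feed-forward weights (2 -> 4 -> 2); a real model learns these.
W1 = [[0.5, -0.5, 0.2, 0.1],
      [0.3, 0.4, -0.2, 0.6]]
W2 = [[0.4, 0.1],
      [-0.3, 0.5],
      [0.2, 0.2],
      [0.1, -0.4]]

def matvec(x, W):
    """Row vector x (length len(W)) times matrix W, giving length len(W[0])."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def feed_forward(x):
    """Position-wise feed-forward network: ReLU(x W1) W2 (biases omitted)."""
    hidden = [max(0.0, h) for h in matvec(x, W1)]
    return matvec(hidden, W2)

def encoder_layer(vectors):
    contextual = self_attention(vectors)          # tokens look at each other
    return [feed_forward(v) for v in contextual]  # each position processed on its own

def encoder_stack(vectors, num_layers=3):
    """Pass the vectors through num_layers encoders, one after another."""
    for _ in range(num_layers):
        vectors = encoder_layer(vectors)
    return vectors

inputs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
encoded = encoder_stack(inputs, num_layers=3)
print(len(encoded), len(encoded[0]))  # 3 2
```

Each encoder receives the previous encoder's output and returns vectors of the same shape, which is what makes it easy to stack 3, 6, or more of them.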

In summary, self-attention takes a fixed set of input vectors, converts them into contextual vectors based on word dependencies, and passes the new vectors to the feed-forward neural network for further processing by the following encoders. Stacking more encoders generally helps the model capture richer patterns, which in turn helps the decoder match the numbers for a word in English to a word in Portuguese with the same meaning, or translation!

Now, you may be wondering how self-attention knows which words have a dependency in order to do contextual vector embedding. I'll cover that here, but first, let's discuss tokens. So far I have talked about vectors as words converted to numbers. However, you may hear the term tokens when researching or discussing AI. A token is a unit of text the model reads: often a whole word, but sometimes a piece of a word or a punctuation mark.

A vector is a numerical representation of a token. So, just for clarity, when I reference input vectors in this write-up, I'm referring to tokens converted into numbers.
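
To make tokens concrete, here is a toy tokenizer that splits a word into the longest pieces it can find in a small, hypothetical vocabulary, then looks up a vector for each piece. Real tokenizers (such as byte-pair encoding or WordPiece) learn their vocabularies from data and handle unknown text more gracefully; this greedy longest-match version, its vocabulary, and its random embedding table are for illustration only.

```python
import random

# Hypothetical subword vocabulary; real tokenizers learn theirs from data.
VOCAB = ["trans", "form", "er", "s", "attend", "ing", "un", "known"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(word):
    """Greedy longest-match split of one word into vocabulary pieces (toy only)."""
    pieces, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):  # try the longest piece first
            if word[start:end] in TOKEN_TO_ID:
                pieces.append(word[start:end])
                start = end
                break
        else:  # no vocabulary piece matched at this position
            raise ValueError(f"cannot tokenize {word!r} at position {start}")
    return pieces

# Each token ID indexes a row of a hypothetical, randomly initialized embedding table.
random.seed(0)
EMBEDDINGS = [[random.uniform(-1, 1) for _ in range(4)] for _ in VOCAB]

tokens = tokenize("transformers")
ids = [TOKEN_TO_ID[t] for t in tokens]
vectors = [EMBEDDINGS[i] for i in ids]
print(tokens)  # ['trans', 'form', 'er', 's']
print(ids)     # [0, 1, 2, 3]
```

Here "transformers" becomes 4 tokens and therefore 4 input vectors, one per token.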

From these input vectors (tokens converted into numbers), the model computes three important vectors for contextual embedding: queries, keys, and values. Each one is produced by multiplying the input vector by a separate weight matrix that the model learns during training.
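
Here is a minimal sketch of how queries, keys, and values could be computed for a 3-token sentence. The input vectors `X` and the weight matrices `W_Q`, `W_K`, and `W_V` are hypothetical numbers; in a real model the weights are learned during training. Each matrix multiplication produces one query, one key, and one value vector per token.

```python
def matmul(A, B):
    """Multiply an (n x m) matrix A by an (m x p) matrix B (lists of lists)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

# Hypothetical input vectors for 3 tokens (one row per token, 2 numbers each).
X = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]

# Hypothetical weight matrices; a real model learns these during training.
W_Q = [[1.0, 0.0], [0.0, 2.0]]
W_K = [[0.5, 0.5], [0.5, -0.5]]
W_V = [[2.0, 0.0], [0.0, 1.0]]

Q = matmul(X, W_Q)  # one query vector per token
K = matmul(X, W_K)  # one key vector per token
V = matmul(X, W_V)  # one value vector per token
print(Q)  # [[1.0, 0.0], [0.0, 2.0], [1.0, 2.0]]
```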

A query vector represents the current token, the one for which attention is being calculated. It helps determine how important the other tokens (or words) are in the context of the current token (the word being compared). Queries serve two important purposes: focus determination and contextual understanding.

Focus determination helps the model decide which parts of the sequence to focus on for each specific token. By calculating the dot product between a query vector and every key vector, and then applying a softmax to turn those scores into weights (this weighting is what makes it soft attention), the model can assess how much attention (or importance) to give each token in the sentence relative to the token currently being processed.
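
The focus-determination step can be sketched for a single query. The query and key vectors are hypothetical. The scores are divided by the square root of the vector size (`d_k`), as in the scaled dot-product attention of the original Transformer, and softmax then turns them into weights that sum to 1.

```python
import math

def softmax(scores):
    """Convert raw scores into weights between 0 and 1 that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Score one query against every key, then normalize with softmax.

    Dividing by sqrt(d_k) keeps large dot products from saturating softmax.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    return scores, softmax(scores)

# Hypothetical query for the current token and keys for a 3-token sentence.
query = [1.0, 2.0]
keys = [[0.5, 0.5], [0.5, -0.5], [1.0, 0.0]]
scores, weights = attention_weights(query, keys)
print([round(s, 3) for s in scores])
print([round(w, 3) for w in weights])
```

The first key lines up best with the query, so it receives the largest weight.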

Contextual understanding captures the relationship between the current token (or word) and the rest of the sequence (or sentence), so the model can pick up dependencies and context.

Key vectors are the numbers the model uses to represent every token in the sequence so that each one can be compared with the query vectors. Each query-key comparison produces an attention score that indicates how relevant that token is to the current token. This comparison is repeated in every encoder and decoder layer, which is one reason the stacked transformer architecture can capture complex relationships.

Value vectors hold the information that will be aggregated to form the output of the attention mechanism. In other words, once the attention scores have been turned into weights, the model uses those weights to take a weighted sum of the value vectors. The weighted values are passed to the feed-forward neural network, so the model preserves and aggregates relevant context from the entire sequence, which is useful in use cases like translation, summarization, and more!
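
Putting queries, keys, and values together gives scaled dot-product attention, usually written as softmax(Q K^T / sqrt(d_k)) V. Here is a minimal pure-Python sketch of that formula; the `Q`, `K`, and `V` values are hypothetical, and real implementations use optimized matrix libraries and multiple attention heads.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, one row per token."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how much each token matters to this one
        # Weighted sum of the value vectors: the context gathered for this token.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs

# Hypothetical Q, K and V for a 3-token sentence.
Q = [[1.0, 0.0], [0.0, 2.0], [1.0, 2.0]]
K = [[0.5, 0.5], [0.5, -0.5], [1.0, 0.0]]
V = [[2.0, 0.0], [0.0, 1.0], [2.0, 1.0]]
context = scaled_dot_product_attention(Q, K, V)
for row in context:
    print([round(x, 3) for x in row])
```

Each output row is a blend of the value vectors, weighted by how relevant each token is to the current one. These rows are what get passed on to the feed-forward network.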